The Internet of Things (IoT) is one of the hottest mega-trends in technology, and for good reason: IoT touches all the components of what we consider Web 3.0, including Big Data Analytics, Cloud Computing, and Mobile Computing.

The Challenge

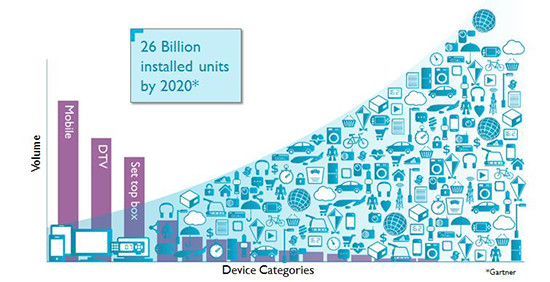

The IoT promises to bring connectivity down to earth, permeating every home, vehicle, and workplace with smart, Internet-connected devices. But as our dependence on these newly connected devices grows along with the benefits and uses of a maturing technology, the reliability of the gateways that make the IoT a functional reality must increase, making uptime a near guarantee. As every appliance, light, door, piece of clothing, and other object in your home and office becomes potentially Internet-enabled, the Internet of Things is poised to put major stress on the current Internet and data center infrastructure. Gartner predicts that the IoT may include 26 billion connected units by 2020.

To illustrate the need for smarter data processing: IDC estimates that the share of data analyzed on devices physically close to the Internet of Things is approaching 40 percent, which underscores the urgent need for a different approach.

The Solution

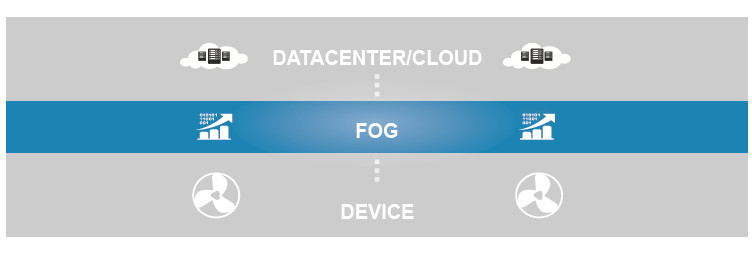

To deal with this challenge, fog computing is the champion.

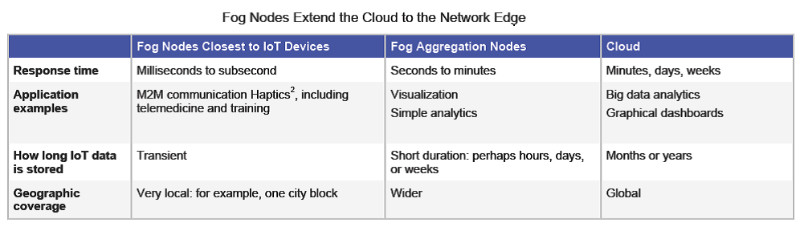

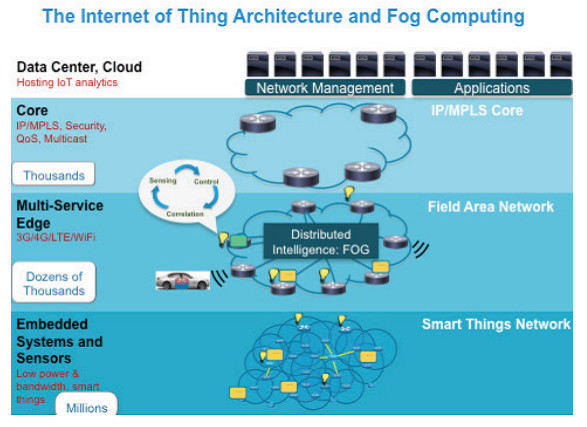

Fog computing allows computing, decision-making, and action-taking to happen via IoT devices and pushes only relevant data to the cloud. Cisco coined the term “fog computing” and gave a brilliant definition of it: “The fog extends the cloud to be closer to the things that produce and act on IoT data. These devices, called fog nodes, can be deployed anywhere with a network connection: on a factory floor, on top of a power pole, alongside a railway track, in a vehicle, or on an oil rig. Any device with computing, storage, and network connectivity can be a fog node. Examples include industrial controllers, switches, routers, embedded servers, and video surveillance cameras.”

To understand the concept, consider the three actions that define fog computing. A fog deployment:

- Analyzes the most time-sensitive data at the network edge, close to where it is generated, instead of sending vast amounts of IoT data to the cloud.

- Acts on IoT data in milliseconds, based on policy.

- Sends selected data to the cloud for historical analysis and longer-term storage.
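The three actions above can be sketched as a tiny fog node in Python. This is a minimal illustration under stated assumptions, not Cisco's or any vendor's design: the `FogNode` and `Reading` classes, the overheat policy, the 90.0 threshold, and the `open_cooling_valve` action are all hypothetical, and the "cloud" is just an in-memory outbox rather than a real uplink.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple


@dataclass
class Reading:
    sensor_id: str
    value: float


@dataclass
class FogNode:
    """Hypothetical fog node: acts locally on a policy, forwards only selected data."""

    # Policy: returns the name of a local action, or None when nothing needs doing.
    policy: Callable[[Reading], Optional[str]]
    # Stand-in for the cloud uplink; no real network I/O happens here.
    cloud_outbox: List[Reading] = field(default_factory=list)
    local_actions: List[Tuple[str, str]] = field(default_factory=list)

    def handle(self, reading: Reading) -> None:
        action = self.policy(reading)
        if action is not None:
            # Time-sensitive path: act at the edge, with no cloud round trip.
            self.local_actions.append((reading.sensor_id, action))
            # Selection rule (an assumption): only readings that triggered
            # an action are queued for historical analysis in the cloud.
            self.cloud_outbox.append(reading)


def overheat_policy(r: Reading) -> Optional[str]:
    # Hypothetical rule: open a cooling valve above 90 degrees.
    return "open_cooling_valve" if r.value > 90.0 else None


node = FogNode(policy=overheat_policy)
for v in [72.0, 95.5, 80.1]:
    node.handle(Reading("boiler-1", v))

print(node.local_actions)      # [('boiler-1', 'open_cooling_valve')]
print(len(node.cloud_outbox))  # 1
```

Forwarding only the readings that triggered an action is just one possible selection rule; a real deployment might also forward periodic samples or summaries.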

Benefits of using Fog Computing

- Minimize latency

- Conserve network bandwidth

- Address security concerns at all levels of the network

- Operate reliably with quick decisions

- Collect and secure a wide range of data

- Move data to the best place for processing

- Lower expenses by using high computing power only when needed and consuming less bandwidth

- Better analysis of, and insights from, local data

Keep in mind that fog computing is by no means a replacement for cloud computing; it works in conjunction with the cloud, optimizing the use of available resources. It was born of the need to address two challenges: processing and acting on incoming data in real time, and the limited supply of resources such as bandwidth and computing power. Another factor in fog computing's favor is that it takes advantage of the distributed nature of today's virtualized IT resources. This improvement to the data-path hierarchy is enabled by the increased compute functionality that manufacturers are building into their edge routers and switches.

Real-Life Example:

A traffic light system in a major city is equipped with smart sensors. It is the day after the local team won a championship game and it’s the morning of the day of the big parade. A surge of traffic into the city is expected as revelers come to celebrate their team’s win. As the traffic builds, data are collected from individual traffic lights. The application developed by the city to adjust light patterns and timing is running on each edge device. The app automatically makes adjustments to light patterns in real time, at the edge, working around traffic impediments as they arise and diminish. Traffic delays are kept to a minimum, and fans spend less time in their cars and have more time to enjoy their big day.
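To make "adjusting light patterns at the edge" concrete, here is a minimal Python sketch of one simple rule an edge controller could run: split a fixed signal cycle among approaches in proportion to their queued vehicles, with a guaranteed minimum green time. The function name, the 90-second cycle, the 10-second minimum, and the queue counts are hypothetical; the city's actual application is not described, and this sketch ignores yellow and all-red intervals.

```python
from typing import Dict


def split_green_time(
    queues: Dict[str, int], cycle_s: float = 90.0, min_green_s: float = 10.0
) -> Dict[str, float]:
    """Divide one signal cycle among approaches in proportion to queued vehicles.

    Every approach gets at least min_green_s; the spare time is shared by queue length.
    Simplification: yellow and all-red clearance intervals are ignored.
    """
    if not queues:
        raise ValueError("at least one approach is required")
    reserved = min_green_s * len(queues)
    if reserved > cycle_s:
        raise ValueError("cycle too short for the minimum green times")
    spare = cycle_s - reserved
    total = sum(queues.values())
    if total == 0:
        # No demand detected: share the spare time evenly.
        return {a: min_green_s + spare / len(queues) for a in queues}
    return {a: min_green_s + spare * q / total for a, q in queues.items()}


# Parade-morning surge (made-up counts): inbound traffic dominates.
print(split_green_time({"north_south": 30, "east_west": 10}))
# {'north_south': 62.5, 'east_west': 27.5}
```

Because the rule needs only local queue counts, it can rerun every cycle on the edge device without waiting on the cloud.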

After the parade is over, all the data collected from the traffic light system would be sent up to the cloud and analyzed, supporting predictive analysis and allowing the city to adjust and improve its traffic application’s response to future traffic anomalies. There is little value in sending a live steady stream of everyday traffic sensor data to the cloud for storage and analysis. The civic engineers have a good handle on normal traffic patterns. The relevant data is sensor information that diverges from the norm, such as the data from parade day.
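Deciding which data "diverges from the norm" can be sketched with a simple z-score test against a baseline of normal traffic. This is one possible rule, assumed for illustration; the function name, the threshold of 3 standard deviations, and the vehicle counts below are all hypothetical.

```python
import statistics
from typing import List, Tuple


def select_anomalies(
    baseline: List[float],
    readings: List[Tuple[str, float]],
    z_threshold: float = 3.0,
) -> List[Tuple[str, float]]:
    """Keep only readings that diverge from normal traffic, as candidates for cloud upload."""
    mean = statistics.mean(baseline)
    stdev = statistics.stdev(baseline)  # needs at least two baseline values
    if stdev == 0:
        # Perfectly flat baseline: anything different from it is unusual.
        return [(t, c) for t, c in readings if c != mean]
    return [(t, c) for t, c in readings if abs(c - mean) / stdev > z_threshold]


# Made-up vehicles-per-minute counts at one intersection on ordinary days.
normal_days = [40.0, 42.0, 38.0, 41.0, 39.0, 40.0]
parade_morning = [("07:00", 41.0), ("08:00", 95.0), ("09:00", 37.0)]
print(select_anomalies(normal_days, parade_morning))  # [('08:00', 95.0)]
```

Everyday readings stay at the edge; only the outlier is sent up for the predictive analysis the city cares about.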

The Dynamics of Fog Computing

Fog computing can be thought of as a “low to the ground” extension of the cloud to nearby gateways, and it meets this need efficiently. As Gartner networking analyst Joe Skorupa puts it: “The enormous number of devices, coupled with the sheer volume, velocity, and structure of IoT data, creates challenges, particularly in the areas of security, data, storage management, servers and the data center network with real-time business processes at stake. Data center managers will need to deploy more forward-looking capacity management in these areas to be able to proactively meet the business priorities associated with IoT.”

For the data handling and backhaul issues that shadow the IoT's future, fog computing offers a functional solution. Networking equipment vendors proposing such a framework envision routers with industrial-strength reliability, running a combination of open Linux and JVM platforms alongside the vendor's own proprietary OS. By using open platforms, applications could be ported to IT infrastructure using a programming environment that is familiar and supported by multiple vendors. In this way, smart edge gateways can either handle or intelligently redirect the millions of tasks coming from the myriad sensors and monitors of the IoT, transmitting only summary and exception data to the cloud proper.
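"Summary and exception data" can be illustrated with a gateway that collapses a window of raw readings into a single report: a few aggregate statistics plus any values outside an allowed range. This Python sketch assumes a fixed allowed range; the `WindowReport` fields, the limits, and the sample values are hypothetical, not part of any vendor framework.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class WindowReport:
    count: int
    minimum: float
    maximum: float
    mean: float
    exceptions: List[float]


def summarize_window(values: List[float], low: float, high: float) -> WindowReport:
    """Collapse a window of raw readings into one summary plus out-of-range exceptions."""
    if not values:
        raise ValueError("window must contain at least one reading")
    # Exceptions are kept verbatim; everything else is reduced to aggregates.
    exceptions = [v for v in values if v < low or v > high]
    return WindowReport(
        count=len(values),
        minimum=min(values),
        maximum=max(values),
        mean=sum(values) / len(values),
        exceptions=exceptions,
    )


report = summarize_window([20.0, 21.0, 22.0, 35.0], low=15.0, high=30.0)
print(report)
# WindowReport(count=4, minimum=20.0, maximum=35.0, mean=24.5, exceptions=[35.0])
```

However many readings arrive in a window, the gateway sends one fixed-size summary plus the exceptions, which is where the backhaul savings come from.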

Fog Computing and Smart Gateways

The success of fog computing hinges directly on the resilience of the smart gateways directing countless tasks on an Internet teeming with IoT devices. IT resilience will be a necessity for the business continuity of IoT operations, and the following tasks are essential to that success:

- Redundancy

- Security

- Monitoring of power and cooling

- Failover solutions in place to ensure maximum uptime

According to Gartner, every hour of downtime can cost an organization up to $300,000. The speed of deployment, cost-effective scalability, and ease of management with limited resources are also chief concerns.
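A quick back-of-the-envelope calculation shows why redundancy and failover matter at these stakes. The sketch below assumes gateway failures are independent and failover is instantaneous, which real deployments only approximate; the 99.9% single-gateway availability is a hypothetical figure, and Gartner's $300,000 is treated strictly as an upper bound per hour.

```python
HOURS_PER_YEAR = 24 * 365  # 8760; leap years ignored
MAX_COST_PER_HOUR = 300_000  # Gartner's "up to" figure, used as an upper bound


def parallel_availability(single: float, n: int) -> float:
    """Availability of n redundant gateways.

    Assumes independent failures and instant failover: the service is down
    only when all n gateways are down at the same time.
    """
    return 1 - (1 - single) ** n


def yearly_downtime_hours(availability: float) -> float:
    return (1 - availability) * HOURS_PER_YEAR


single = 0.999  # hypothetical availability of one gateway
for n in (1, 2):
    a = parallel_availability(single, n)
    hours = yearly_downtime_hours(a)
    print(f"{n} gateway(s): {hours:.5f} h/yr, cost up to ${hours * MAX_COST_PER_HOUR:,.0f}")
```

Under these assumptions, one gateway is down about 8.76 hours a year (up to roughly $2.6 million), while a redundant pair is down well under a minute. Correlated failures, such as a shared power or cooling fault, erode that gain, which is why power and cooling monitoring sit on the same list as redundancy.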

Conclusion

Moving the intelligent processing of data to the edge only raises the stakes for maintaining the availability of these smart gateways and their communication path to the cloud. When the IoT provides methods that allow people to manage their daily lives, from locking their homes to checking their schedules to cooking their meals, gateway downtime in the fog computing world becomes a critical issue. Additionally, resilience and failover solutions that safeguard those processes will become even more essential.

Ahmed Banafa Named No. 1 Top Voice To Follow in Tech by LinkedIn in 2016

Read more articles at IoT Trends by Ahmed Banafa