AI-powered and energy-efficient edge devices: MobileNet on STM32 MCUs



June 9, 2019 at 5:57 am #32792

#Discussion(IoTStack) [ via IoTForIndiaGroup ]

Today, major vendors offer dozens of cloud services through their online platforms to process and store personal data. At the other end of the spectrum, edge-device technology still has several limitations. For instance, anyone who wants to place a wireless camera in front of their house gate will most likely have to deal with either the lack of a nearby power outlet or a battery pack that needs frequent replacement if the camera streams images continuously. The goal of always-on functionality is therefore tightly bound to energy constraints.

To break this barrier, the key is to be smart: objects at the edge should behave like small brains and efficiently convert data into information. This reduces data traffic and, in turn, yields large energy savings.

Energy-efficient smartness poses two main challenges when it meets Deep Learning (DL) approaches, expressed by the following questions:

1. Which deep learning model best fits the available resources of our energy-constrained device, and how can we run it efficiently there? Can we fine-tune the model to favor implementation on the target architecture without degrading its accuracy?
2. Can we improve the edge device architecture to efficiently support a given DL model workload?

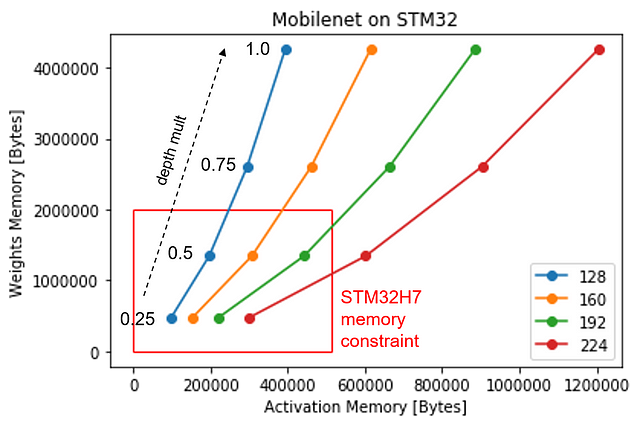

We argue that there is no single right order for answering these questions. On the contrary, working on both sides concurrently leads to the best result. In this post, we describe how recent deep learning models, already trained and optimized for energy efficiency, can be mapped onto an edge device such as an STM32H7 MCU using our optimized CMSIS-NN library extensions.

So, we downloaded an 8-bit quantized MobileNet 160_0.25 (160×160 input resolution, 0.25 width multiplier) from TensorFlow and ported it to an STM32H7 using our library extension. The result: a 1000-class classification task runs in about 165 ms (roughly 65M cycles at 400 MHz). If you want to know more, check out our GitHub repository. More insights on the topic will come soon. Stay tuned!
